I kept rebuilding the same AI plumbing. Add Anthropic. Add OpenAI. Add Gemini. Wire up tool calls. Handle retries. Parse structured outputs. Each time I got better. But the knowledge never carried over.

So I built the thing I wanted: an orchestration layer as a REST API. Along the way I 10x’d my understanding of prompting. Not from reading. From building and hitting walls.

The Problem

Every project starts the same. I need an AI feature, so I wire up API calls and ship it. Three months later I’m doing it again. And again.

Each time I rebuild the same stuff:

- Provider adapters that normalize responses across OpenAI, Anthropic, Gemini

- Retry logic with exponential backoff

- Tool calling loops

- Result normalization

- Streaming
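Of that list, retry logic is the piece most worth writing only once. Here is a minimal sketch in Ruby. It assumes a hypothetical `RetryableError` that an adapter raises for rate limits or transient 5xx responses. The `with_retries` name and its default delays are illustrative, not values the post prescribes.

```ruby
# Hypothetical error an adapter raises for retryable failures (429s, 5xx).
class RetryableError < StandardError; end

# Retry the block with exponential backoff plus jitter.
# The sleeper is injectable so delays can be tested without sleeping.
def with_retries(max_attempts: 4, base_delay: 0.5, max_delay: 8.0, sleeper: method(:sleep))
  attempt = 0
  begin
    attempt += 1
    yield attempt
  rescue RetryableError
    raise if attempt >= max_attempts # give up: re-raise the last error

    # Delay doubles each attempt (0.5s, 1s, 2s, ...), capped at max_delay.
    delay = [base_delay * (2**(attempt - 1)), max_delay].min
    # Sleep 50-100% of the delay so many clients don't retry in lockstep.
    sleeper.call(delay * (0.5 + rand * 0.5))
    retry # re-runs the begin block; attempt keeps its value
  end
end
```

In use, every provider call goes through the same wrapper, e.g. `with_retries { |attempt| call_provider(request) }`, where `call_provider` is a placeholder for whatever adapter method makes the HTTP request.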

I’d solve a problem, nail the retry logic, get tool execution right. Then never touch that code again. Next project, start from scratch.

The knowledge never compounds.

What I wanted was one API I could call from anywhere. Python, Node, whatever. `POST /chat` with messages and tools. Same interface for Claude, GPT-5, Gemini. Same retry behavior. Same result shape.
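Behind "same interface" is a normalization step. Each provider's response body gets mapped into one shape before the rest of the system sees it. This is a sketch under assumptions: `ToolCall`, `ChatResult`, and the `normalize_*` functions are hypothetical. The JSON fields it reads follow the documented response formats of those APIs:

* Anthropic's Messages API: a `content` array of `text` and `tool_use` blocks.
* OpenAI's Chat Completions API: `choices[0].message.tool_calls`.

A Gemini adapter would map its own format the same way.

```ruby
require "json"

# Hypothetical normalized shapes shared by every provider adapter.
ToolCall = Struct.new(:id, :name, :arguments, keyword_init: true)
ChatResult = Struct.new(:text, :tool_calls, keyword_init: true) do
  def tool_calls?
    !tool_calls.empty?
  end
end

# Anthropic Messages API: `content` is an array of typed blocks.
def normalize_anthropic(body)
  blocks = body.fetch("content")
  text = blocks.select { |b| b["type"] == "text" }.map { |b| b["text"] }.join
  calls = blocks.select { |b| b["type"] == "tool_use" }.map do |b|
    ToolCall.new(id: b["id"], name: b["name"], arguments: b["input"])
  end
  ChatResult.new(text: text, tool_calls: calls)
end

# OpenAI Chat Completions: tool arguments arrive as a JSON-encoded string.
def normalize_openai(body)
  message = body.fetch("choices").first.fetch("message")
  calls = (message["tool_calls"] || []).map do |tc|
    ToolCall.new(id: tc["id"],
                 name: tc.dig("function", "name"),
                 arguments: JSON.parse(tc.dig("function", "arguments")))
  end
  ChatResult.new(text: message["content"].to_s, tool_calls: calls)
end
```

The adapter hides one asymmetry in particular. Anthropic returns tool input as a parsed object, while OpenAI returns it as a string that still needs `JSON.parse`.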

So I built it. REST API. Maintain it once, use it everywhere. I also built a web UI on top of the same API for my own research and writing. The API is the product. The UI is just a client.

Amp Was the Spark

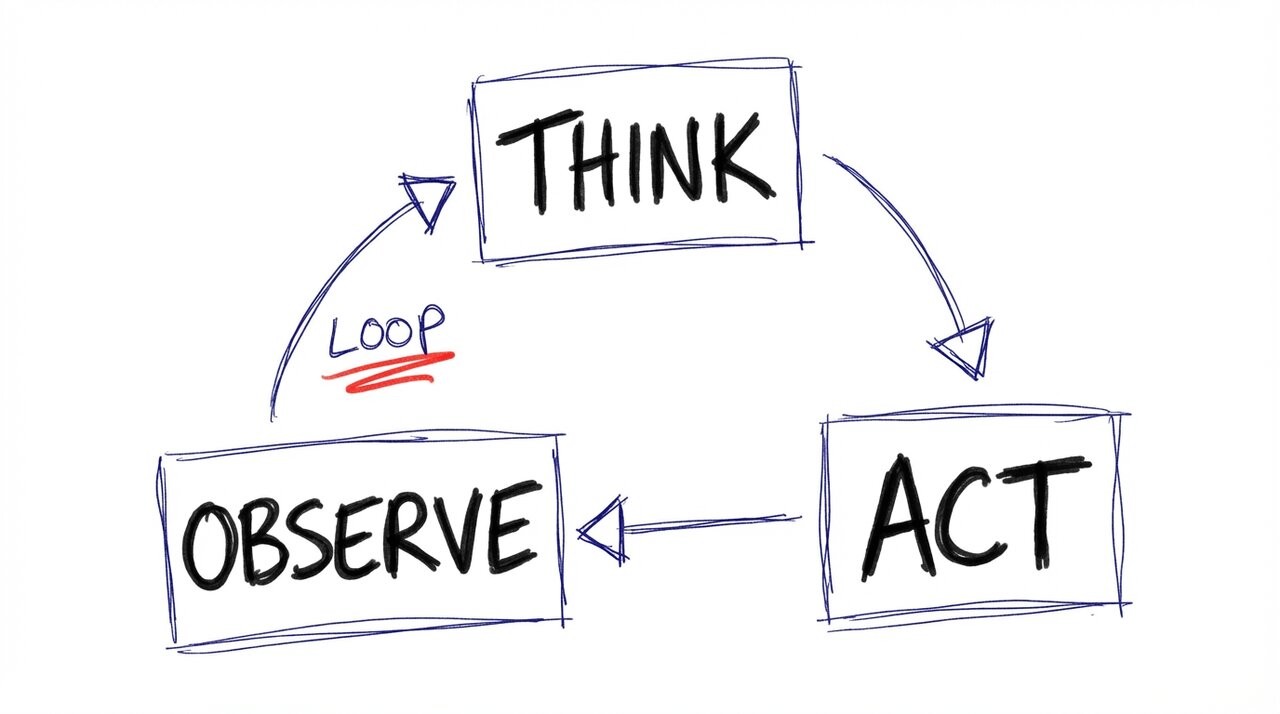

First time I watched Amp call a tool, wait for results, then decide what to do next based on what it found, something clicked. Not chat with function calling bolted on. A loop. Think, act, observe, repeat.

Amp lives in the terminal though. I wanted persistent threads. Attachments. Spin off sub-tasks and come back days later. So I built my own version. Used Amp to help me do it. The irony wasn’t lost on me.

The loop is the thing. Call the model. If it wants a tool, run the tool. Feed results back. Repeat until done. Everything else is just infrastructure around that loop.

The Core Loop

LLMs don’t do things. They decide things.

The orchestrator turns decisions into actions.

```ruby
def execute_loop
  loop do
    result = call_llm(messages, tools: available_tools)

    if result.tool_calls?
      messages << result.to_assistant_message # Record the tool request
      tool_results = dispatch_all(result.tool_calls)
      messages.concat(tool_results.map(&:to_tool_message)) # Add results
    else
      messages << result.to_assistant_message
      break # Done - LLM gave us text
    end
  end
end
```

Each iteration, the LLM sees more context until it has enough to respond.

Some tools run in parallel (web searches, calculations). Others run sequentially (side effects). The dispatcher partitions them:

```ruby
def dispatch_all(tool_calls)
  parallel_calls, sequential_calls = tool_calls.partition do |tc|
    ToolRegistry.find(tc.name).parallel_safe?
  end

  # Run parallel-safe tools concurrently
  threads = parallel_calls.map { |tc| Thread.new { dispatch(tc) } }
  results = threads.map(&:value)

  # Run sequential tools one at a time
  sequential_calls.each { |tc| results << dispatch(tc) }

  results
end
```

Tools define capabilities. `web_search` finds current info. `read_web_page` fetches URLs. `task` spawns sub-agents. Each tool is a contract.

Skills take this further. If you’ve used Amp or Claude Code, you’ve seen SKILL.md files. Markdown that injects instructions into the system prompt. A well-defined pattern now. My system works the same way. A skill is a reusable prompt module with a trigger, instructions, and optionally tools it unlocks. Same orchestrator can behave like a researcher, coder, or writing assistant depending on which skills are active.
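In this sketch, a skill is just data the orchestrator merges into each request. Several parts are assumptions: `Skill`, `assemble`, and the example skills are hypothetical. The regex triggers are a simplification. A real trigger could just as well be a description the model reads and decides on.

```ruby
# Hypothetical skill: a trigger, prompt instructions, and tools it unlocks.
Skill = Struct.new(:name, :trigger, :instructions, :tools, keyword_init: true) do
  def active_for?(text)
    trigger.match?(text)
  end
end

SKILLS = [
  Skill.new(name: "research", trigger: /\b(research|sources|cite)\b/i,
            instructions: "Search before answering. Cite every claim.",
            tools: %w[web_search read_web_page]),
  Skill.new(name: "writing", trigger: /\b(draft|edit|rewrite)\b/i,
            instructions: "Match the author's voice. Prefer short sentences.",
            tools: [])
].freeze

BASE_PROMPT = "You are a helpful assistant."

# Build one request's system prompt and tool list from the active skills.
def assemble(user_text, base_tools: [])
  active = SKILLS.select { |s| s.active_for?(user_text) }
  sections = active.map { |s| "## Skill: #{s.name}\n#{s.instructions}" }
  {
    system: [BASE_PROMPT, *sections].join("\n\n"),
    tools: (base_tools + active.flat_map(&:tools)).uniq,
    skills: active.map(&:name)
  }
end
```

Inactive skills contribute nothing to the prompt or tool list. That is the point when each one costs tokens.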

Catch: every tool and skill costs tokens. Context stopped being abstract and became a hard limit.

Context Is a Budget

Mistake I made early: treated context like a bucket I could keep filling. Conversations grow. More system prompt. More tool definitions. More history. Eventually the model loses the thread. Doesn’t break obviously. Just gets vague. Misses details. Hallucinates.

Fix: treat context as a budget. Every token counts. You need thresholds.

```ruby
THRESHOLDS = {
  warn_user: 0.80,     # Yellow light - "You're running low"
  block_input: 0.90,   # Red light - "Can't add more"
  force_spin_off: 0.95 # Escape valve - "Time to start fresh"
}

def status
  pct = usage_percentage

  if pct >= THRESHOLDS[:force_spin_off]
    :force_spin_off
  elsif pct >= THRESHOLDS[:block_input]
    :block_input
  elsif pct >= THRESHOLDS[:warn_user]
    :warn_user
  else
    :ok
  end
end
```

80% warning. 90% block input. 95% force a spin-off.

Spin-offs are inspired by Amp handoffs. Conversation hits limit, smaller model extracts essential context, seeds a fresh thread. Child starts light. No bloated history.
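A spin-off can be sketched in a few lines. `ChatThread`, `spin_off`, and the handoff prompt wording are hypothetical. `summarizer` stands in for a call to whatever smaller model does the extraction.

```ruby
require "securerandom"

# Hypothetical thread record; parent_id links a child back to its origin.
ChatThread = Struct.new(:id, :parent_id, :messages, keyword_init: true)

HANDOFF_PROMPT = <<~PROMPT
  Summarize this conversation for a fresh thread. Keep only:
  the current task, hard constraints, decisions made, and open questions.
PROMPT

# Condense the parent with a smaller model and seed a light child thread.
def spin_off(parent, summarizer:)
  transcript = parent.messages.map { |m| "#{m[:role]}: #{m[:content]}" }.join("\n")
  seed = summarizer.call("#{HANDOFF_PROMPT}\n#{transcript}")

  ChatThread.new(
    id: SecureRandom.uuid,
    parent_id: parent.id, # full history stays reachable through the parent
    messages: [{ role: "user", content: "Context from parent thread:\n#{seed}" }]
  )
end
```

The child carries one seed message instead of the whole transcript. Keeping `parent_id` means nothing is lost, only moved out of the window.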

The question: what does the model need to decide right now? Not what might be useful someday. Current task, constraints, background. That’s it.

Deliberate allocation, not passive accumulation. Sharper responses because the model isn’t sifting through noise.
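Deliberate allocation can be made literal by filling the window in priority order instead of appending everything. This sketch rests on several assumptions. The `allocate` helper is hypothetical, token counts use the same rough ~4 characters per token estimate, and the ordering (task, constraints, background, then recent history) is read off the question above rather than taken from a real implementation.

```ruby
# Rough estimate: ~4 characters per token (assumption, not a tokenizer).
def estimate_tokens(text)
  (text.length / 4.0).ceil
end

# Fill a token budget by priority instead of accumulating everything.
def allocate(budget:, task:, constraints:, background:, history:)
  raise ArgumentError, "task alone exceeds budget" if estimate_tokens(task) > budget

  picked = []
  remaining = budget

  # Highest priority first: the task, then constraints, then background.
  [task, *constraints, *background].each do |chunk|
    cost = estimate_tokens(chunk)
    next if cost > remaining # skip a chunk that doesn't fit; try the next

    picked << chunk
    remaining -= cost
  end

  # Spend what's left on recent history, newest first.
  recent = []
  history.reverse_each do |msg|
    cost = estimate_tokens(msg)
    break if cost > remaining # stop so the kept turns stay contiguous

    recent.unshift(msg) # preserve chronological order
    remaining -= cost
  end

  { context: picked, history: recent, unused: remaining }
end
```

The two phases treat overflow differently on purpose. Background chunks that don't fit are skipped one by one. History stops at the first message that doesn't fit, so the model never sees a conversation with holes in it.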

The Real Product

Models will keep getting better. Context windows will grow. New providers will emerge. None of that changes what I actually built.

I built a place where my understanding lives. A model excels at a specific task? Route that task to it. A faster, cheaper model drops? Swap it in. A prompting pattern clicks? Encode it. Every insight gets baked into the platform. The models are interchangeable. The orchestration layer is mine.

That’s the thing about building infrastructure for yourself: you stop being a consumer of AI and start being an operator. You see the seams. You understand the constraints. You know exactly why something works or doesn’t.

I’m not chasing prompts anymore. I’m building a runtime that gets smarter every time I use it.

Building things compounds in strange ways.