I’m not quitting my job tomorrow.

But I know what I want next and I’m putting it in writing.

Closed mouths don’t get fed.

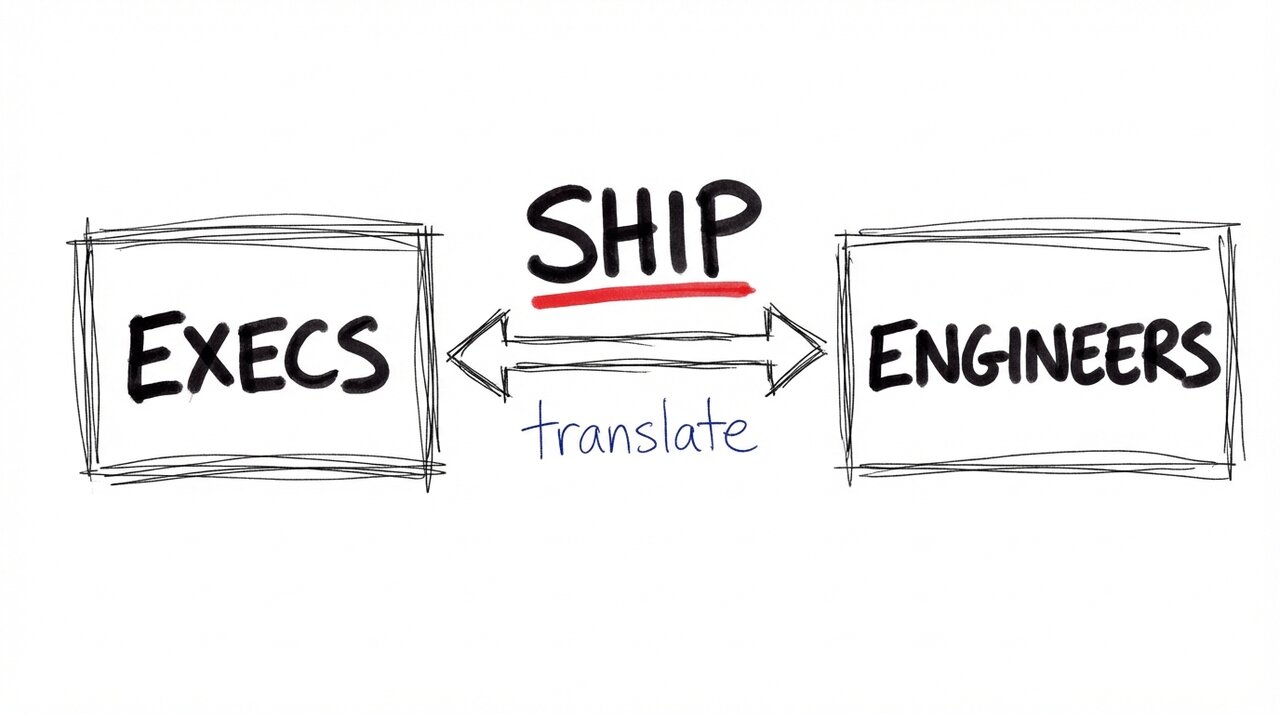

I go deep as a specialist. I connect dots as a generalist. I build production systems that keep working after launch. I translate between executives and engineers.

I ship under real constraints.

AI coding agents like Amp and Claude Code are the best tools I’ve ever used. They’re fast. They handle boilerplate. They let me explore more solutions in less time.

But they’re tools, not replacements.

What I want next: an AI engineering leadership role where we ship real products, at a company that uses AI daily (or is ready to start).

Proof of Work

SnipSnap: Built an image recognition service with OpenCV. Users photographed products to find coupons. Real inputs, messy data. Led an API refactor to improve reliability and cut costs.

That taught me what “production” means: not deployed once, but running reliably while users do unexpected things.

First Look Media: Built security tools for investigative journalists. GPGSync for secure key distribution. A PDF parser that stripped metadata and made documents searchable. Security constraints. Adversarial file formats. Sharp edges everywhere.

IBM Watson: Designed and ran 150+ hands-on technical workshops. Spoke at conferences. Taught developers to build with Watson APIs. I learned why impressive AI fails to ship. The gap between a demo that wows the room and a product that earns trust is enormous. Most of it is integration, ops, metrics, and patience.

Jack Henry (5 years): Senior Developer Advocate for FinTech APIs. I sit between engineering and business, translating capabilities into decisions, and decisions into plans engineers can execute. Regulated industries. Complex stakeholders.

Consulting: Built a consumer marketplace MVP in Rails, shipped in four weeks: auth, listings, transactions, full buyer-to-seller flow. Built a custom data connector from Airtable to a data warehouse: ingestion, parsing, schema mapping. Ship fast or don’t get paid.

Personal Projects: Built my own AI orchestration layer, a REST API that normalizes across LLM providers, handles retries, and runs tool-calling loops. Built once, used everywhere.

What You Get

1. Production Software That Survives Reality

I build software that keeps working after launch.

I haven’t run massive model training pipelines. What I have is a decade of shipping production systems and a clear understanding of where AI is strong, where it’s brittle, and how to build around both.

I care about the parts that show up after the launch post:

- Graceful degradation when the model is wrong

- Cost control: caching, token budgets, rate limiting

- Observability that answers “why did it do that?”

- Eval pipelines that catch regressions before users do

- Latency trade-offs that prioritize user trust over cleverness

I build systems that degrade gracefully instead of lying confidently.

2. Process, Systems, and Influence

I haven’t managed people directly. No hiring, firing, or performance reviews.

What I do is lead through influence: set technical direction, build shared systems, make good judgment repeatable.

In practice:

- Turn ambiguous goals into plans engineers can execute

- Create lightweight operating systems: decision logs, review norms, release checklists

- Standardize “prototype to production” pathways with templates and reference architectures

- Define “what good looks like” so teams can self-evaluate

- Build eval harnesses that people actually use

You can’t scale AI by heroics. You scale it by turning good judgment into process.

3. Executive Translation

I’m good at the translation layer.

Five years of developer advocacy taught me to explain complex systems under time pressure to audiences with different incentives.

I can sit with executives and answer the real questions:

- What do we get if this works?

- What breaks if it fails?

- What does it cost to run?

- What risks are we taking?

- What should we stop doing?

Then I turn that into a plan engineers can build and a narrative stakeholders can fund.

Translation prevents wasted quarters.

Why AI Leadership Stalls

Most teams don’t fail because they lack smart people.

They fail because the structure fights the work.

Companies try to hire one “AI leader” to do two incompatible jobs. Then they wonder why it stalls.

Strategy and execution are different muscles.

One requires patience, alignment, and saying no.

The other requires speed, ownership, and shipping through ambiguity.

Load both onto one person and you get someone always behind on half the job. Either the roadmap drifts while they’re heads-down building, or the team spins while they’re in exec meetings.

The setup that works is two roles, not one. Someone who owns the why and the where. Someone else who owns the how and the shipping.

I can sit in either seat. I stay close to the code because strategies need grounding in what’s buildable.

What I do best is making sure strategy and execution reinforce each other instead of fighting.

The only way to lead that kind of work is to keep your hands in the craft.

How I Stay Sharp

I learn by building.

The MLB Experiment

Built a Python pipeline (scrapers, feature engineering, scikit-learn) for daily fantasy sports (DFS) projections optimized for upside. Qualified for the World Fantasy Baseball Championship twice. Applied the same models to sports betting: if my model says 67% and the book’s odds imply 56%, that 11-point gap is the edge.
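Here is a minimal sketch of that edge arithmetic, not the pipeline itself. It assumes American odds and ignores the bookmaker’s margin; the function names and the -127 line are hypothetical, picked only because -127 implies roughly 56%.

```python
def implied_probability(american_odds: int) -> float:
    """Break-even win probability implied by American odds (margin not removed)."""
    if american_odds < 0:
        # Favorite: risk |odds| to win 100.
        return -american_odds / (-american_odds + 100)
    # Underdog (or even money): risk 100 to win `odds`.
    return 100 / (american_odds + 100)


def edge(model_probability: float, american_odds: int) -> float:
    """Model probability minus the book's implied probability."""
    return model_probability - implied_probability(american_odds)


if __name__ == "__main__":
    line = -127  # hypothetical line, implies roughly 56%
    print(f"book implies: {implied_probability(line):.1%}")
    print(f"edge at 67%:  {edge(0.67, line):.1%}")
```

A positive edge only matters if the model’s 67% is well calibrated, which is why the measuring step counts as much as the modeling step.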

Proof I can take a vague curiosity, build a working system, and measure whether it’s actually working.

Building My Own Orchestration Layer

I built an orchestration layer: a REST API that normalizes across LLM providers, handles retries, and runs tool-calling loops. Same interface for Claude, GPT-5, Gemini. Call it from anywhere.
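To make “same interface, with retries” concrete, here is a small sketch under stated assumptions. It is not the actual layer: `Reply`, `TransientError`, `complete`, and the adapter signature are all hypothetical names, the adapters stand in for real provider SDK calls, and tool-calling loops are left out.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Reply:
    provider: str
    text: str


class TransientError(Exception):
    """Stand-in for a retryable failure such as a timeout or a rate limit."""


# Each adapter hides one provider's SDK behind the same shape: prompt in, text out.
Adapter = Callable[[str], str]


def complete(
    prompt: str,
    adapters: Dict[str, Adapter],
    provider: str,
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> Reply:
    """Call one provider through the shared interface, retrying transient errors."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    call = adapters[provider]
    for attempt in range(1, max_attempts + 1):
        try:
            return Reply(provider=provider, text=call(prompt))
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to the caller
            # Exponential backoff: base_delay, then 2x, then 4x, ...
            sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_adapter(prompt: str) -> str:
        # Fails twice, then succeeds, to exercise the retry path.
        calls["n"] += 1
        if calls["n"] < 3:
            raise TransientError("simulated rate limit")
        return f"echo: {prompt}"

    reply = complete("hello", {"demo": flaky_adapter}, "demo", sleep=lambda s: None)
    print(reply)
```

Passing `sleep` as a parameter keeps the backoff testable without real waiting. Callers only ever see `Reply`, so swapping providers is a one-string change.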

Building it taught me context is a budget, not a bucket. I implemented thresholds on context usage (warn at 80%, block new input at 90%, force a spin-off at 95%) because models get vague when context bloats.
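A minimal sketch of how those three thresholds could be checked. The percentages come from the paragraph above; everything else is an assumption for illustration, including the `ContextAction` names, treating each threshold as inclusive, and measuring usage as tokens used over the model’s context limit.

```python
from enum import Enum


class ContextAction(Enum):
    OK = "ok"
    WARN = "warn"
    BLOCK_INPUT = "block_input"
    FORCE_SPIN_OFF = "force_spin_off"


def context_action(tokens_used: int, context_limit: int) -> ContextAction:
    """Map context usage to an action: 80% warn, 90% block input, 95% spin off."""
    if context_limit <= 0:
        raise ValueError("context_limit must be positive")
    if tokens_used < 0:
        raise ValueError("tokens_used must not be negative")
    # Integer math avoids float rounding exactly at a threshold.
    percent_used_times_limit = tokens_used * 100
    if percent_used_times_limit >= 95 * context_limit:
        return ContextAction.FORCE_SPIN_OFF
    if percent_used_times_limit >= 90 * context_limit:
        return ContextAction.BLOCK_INPUT
    if percent_used_times_limit >= 80 * context_limit:
        return ContextAction.WARN
    return ContextAction.OK


if __name__ == "__main__":
    limit = 200_000  # hypothetical context window size
    for used in (100_000, 160_000, 185_000, 195_000):
        print(used, context_action(used, limit).value)
```

Checking the strictest threshold first means each call returns exactly one action, which keeps the caller’s branching simple.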

Every insight gets baked in. A model excels at a task? Route to it. A prompting pattern clicks? Encode it. The runtime gets smarter every time I use it.

Extending the Tools

I don’t just use Claude Code and Amp. I build on top of them.

Custom skills turn one-off prompts into repeatable workflows: PRD generation, Rails bootstrapping, blog header images. Building skills taught me how agents actually work: context management, tool permissions, when to fork into isolated contexts.

It’s software engineering, not prompting. Productizing workflows, not chatting.

I want to work somewhere that rewards this builder mindset.

The Environment I Want

AI treated like a real tool, not a buzzword.

Whether you’re already shipping or still figuring out where to start, I want somewhere serious about using AI responsibly.

What I want:

- A team that ships quickly and measures reality

- Leaders who want clarity, not theater

- Tight collaboration and shared ownership

- Room to run small bets, then scale the winners

Industry doesn’t matter. How the team operates does.

What I don’t want:

- AI as a branding exercise

- Endless policy talk with no shipping

- “Innovation labs” with no path to production

- Teams where ownership is political instead of practical

Reach Out

Using AI in production and want to do more? Ready to start and need someone to define the strategy?

I’d like to talk.

I bring shipping discipline, systems thinking, and the ability to keep executives aligned while the team builds.

Email: sam@samcouch.com