You’re in a conversation about AI. Someone says “yeah, and then they used RL to fine-tune the model” and everyone nods. You nod too. You have no idea what RL means. At this point, you’re afraid to ask.

I’ve been there. Let’s fix it.

RL Stands for Reinforcement Learning

That’s it. That’s the acronym. But knowing what it stands for doesn’t really help, does it?

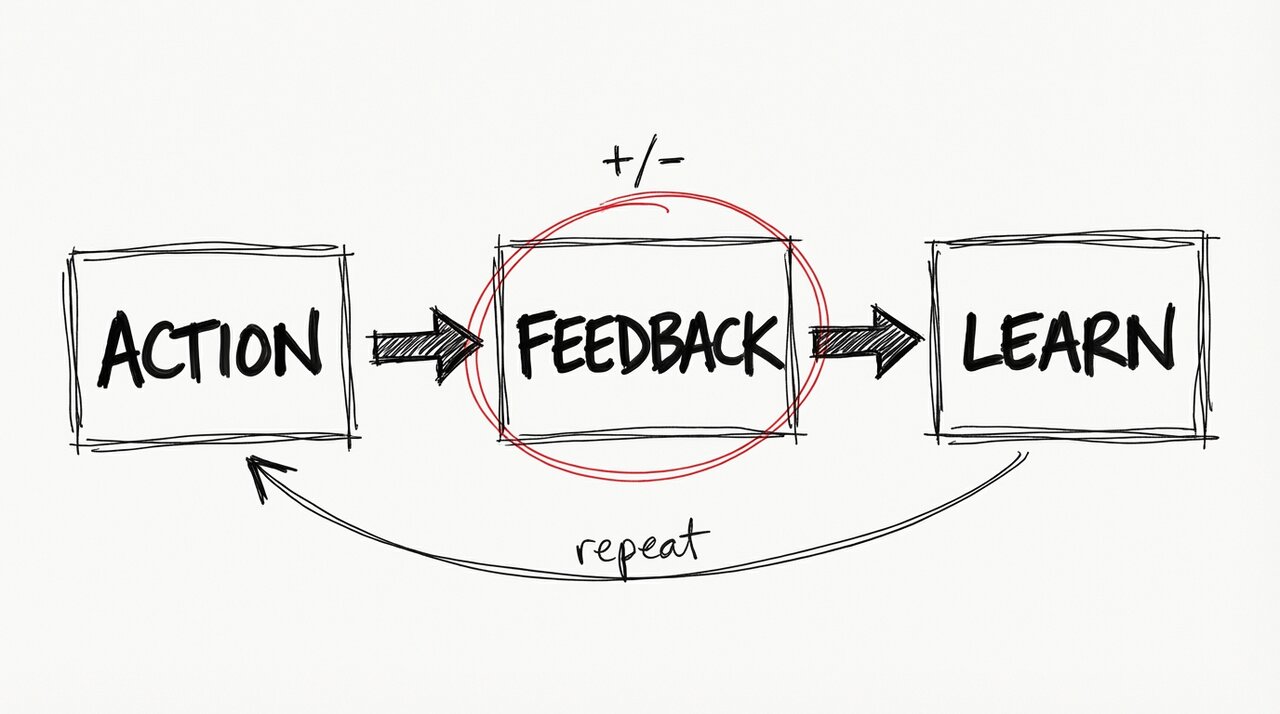

Here’s the actual idea: reinforcement learning is how you teach something through trial and error. Do something good, get a reward. Do something bad, get a penalty. Over time, you figure out what “good” means by chasing the rewards.

Think about how you’d train a dog. (This is one of those analogies that actually holds up.) Dog sits, dog gets a treat. Dog jumps on the couch, dog gets a firm “no.” The dog doesn’t understand English or the concept of furniture etiquette. It just learns: sitting equals treats, couch equals bad vibes. That’s reinforcement learning.
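If you want to see the dog version as code, here’s a minimal Python sketch. It’s a toy setup assumed purely for illustration: a two-action “bandit” with an epsilon-greedy learner, which is a standard textbook starting point and not how any particular AI system is trained. `REWARDS`, `train_dog`, and the reward values are all hypothetical.

```python
import random

# Hypothetical rewards for illustration: a treat (+1) for sitting,
# a firm "no" (-1) for jumping on the couch.
REWARDS = {"sit": 1.0, "jump_on_couch": -1.0}


def train_dog(episodes=200, epsilon=0.1, learning_rate=0.1, seed=0):
    """Learn how good each action is purely from trial and error."""
    rng = random.Random(seed)
    actions = list(REWARDS)
    # The dog starts with no idea which action is good: every estimate is 0.
    values = {action: 0.0 for action in actions}
    for _ in range(episodes):
        if rng.random() < epsilon:
            # Explore: now and then, try something at random.
            action = rng.choice(actions)
        else:
            # Exploit: do whatever has paid off best so far (ties broken randomly).
            best = max(values.values())
            action = rng.choice([a for a in actions if values[a] == best])
        reward = REWARDS[action]  # treat or "no"
        # Nudge this action's estimate toward the reward it just earned.
        values[action] += learning_rate * (reward - values[action])
    return values


values = train_dog()
print("Learned favorite:", max(values, key=values.get))  # prints "Learned favorite: sit"
```

Nothing in the loop tells the learner why sitting is good. Like the dog, it only ever sees the reward numbers. The `epsilon` parameter controls how often it tries something at random instead of repeating what has worked. That occasional randomness is how it finds out about actions it would otherwise never try.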

Why Everyone’s Talking About It Now

RL keeps coming up in AI conversations because of something called RLHF, which stands for Reinforcement Learning from Human Feedback.

Here’s the problem: you train a large language model on the internet, and it learns… everything. The helpful stuff. The toxic stuff. The weird stuff. It has no concept of “this is a good response” versus “this will get me fired.”

So how do you teach it to be helpful instead of unhinged?

You have humans rate its outputs. “This response is good.” “This response is bad.” “This response is technically accurate but sounds like a serial killer wrote it.” Those ratings become the rewards and penalties. The model learns to chase the thumbs-up and avoid the thumbs-down.

That’s RLHF. The model learns that helpful, safe responses get the treat, and unhelpful or harmful ones get the “no.”
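Here’s the same idea pointed at a language model, as a deliberately tiny Python sketch. The “model” is just a set of preference scores over three canned responses to one prompt. The human ratings are made up, and the update rule is REINFORCE, a basic policy-gradient method. Real RLHF pipelines differ in important ways. They typically train a separate reward model on human comparisons and then optimize the full network with an algorithm such as PPO. `RESPONSES`, `train_policy`, and the ratings are all hypothetical.

```python
import math
import random

# Hypothetical canned responses to one prompt, each with a made-up human rating:
# +1 = thumbs-up, -1 = thumbs-down.
RESPONSES = [
    ("Here's a clear, step-by-step answer.", 1.0),
    ("Figure it out yourself.", -1.0),
    ("Technically accurate, but deeply unsettling.", -1.0),
]


def softmax(scores):
    """Turn raw preference scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_policy(steps=500, learning_rate=0.1, seed=0):
    """Toy REINFORCE loop: sample a response, look up its rating, nudge the odds."""
    rng = random.Random(seed)
    scores = [0.0] * len(RESPONSES)  # the "model" starts with no preference
    for _ in range(steps):
        probs = softmax(scores)
        # The model "answers" by sampling one response.
        choice = rng.choices(range(len(RESPONSES)), weights=probs)[0]
        reward = RESPONSES[choice][1]  # the human rating acts as the reward
        # Policy-gradient update: d log P(choice) / d score[i]
        # equals (1 if i == choice else 0) - probs[i].
        for i in range(len(scores)):
            indicator = 1.0 if i == choice else 0.0
            scores[i] += learning_rate * reward * (indicator - probs[i])
    return softmax(scores)


final_probs = train_policy()
for (text, rating), p in zip(RESPONSES, final_probs):
    print(f"{p:.2f}  {text}")
```

After training, nearly all of the probability should land on the thumbs-up response. A thumbs-down does double duty here. It lowers the score of the response that earned it and, through the `- probs[i]` term, slightly raises the alternatives.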

The Punchline

RL isn’t magic. It’s not some mysterious black box that makes AI smart. It’s a training technique: trial and error, applied to models with billions of parameters.

Next time someone drops “reinforcement learning” in conversation, you don’t have to fake it. It’s trial and error with a scoring system. Rewards for good behavior, penalties for bad. That’s the whole idea.

Want to go deeper?

- Hugging Face’s RLHF explainer: great diagrams

- OpenAI’s Spinning Up: solid intro to RL fundamentals