You fire up your AI coding agent. You paste in a massive prompt. Ten minutes later, it’s hallucinating file paths that don’t exist. You re-prompt. It breaks something else. By now you’ve spent more time babysitting it than you would have spent writing the code yourself.

There’s a better approach, and it’s named after the dumbest kid in Springfield.

The Technique

Ralph Wiggum is the eternally confused character from The Simpsons. He tries hard, fails constantly, but never stops.

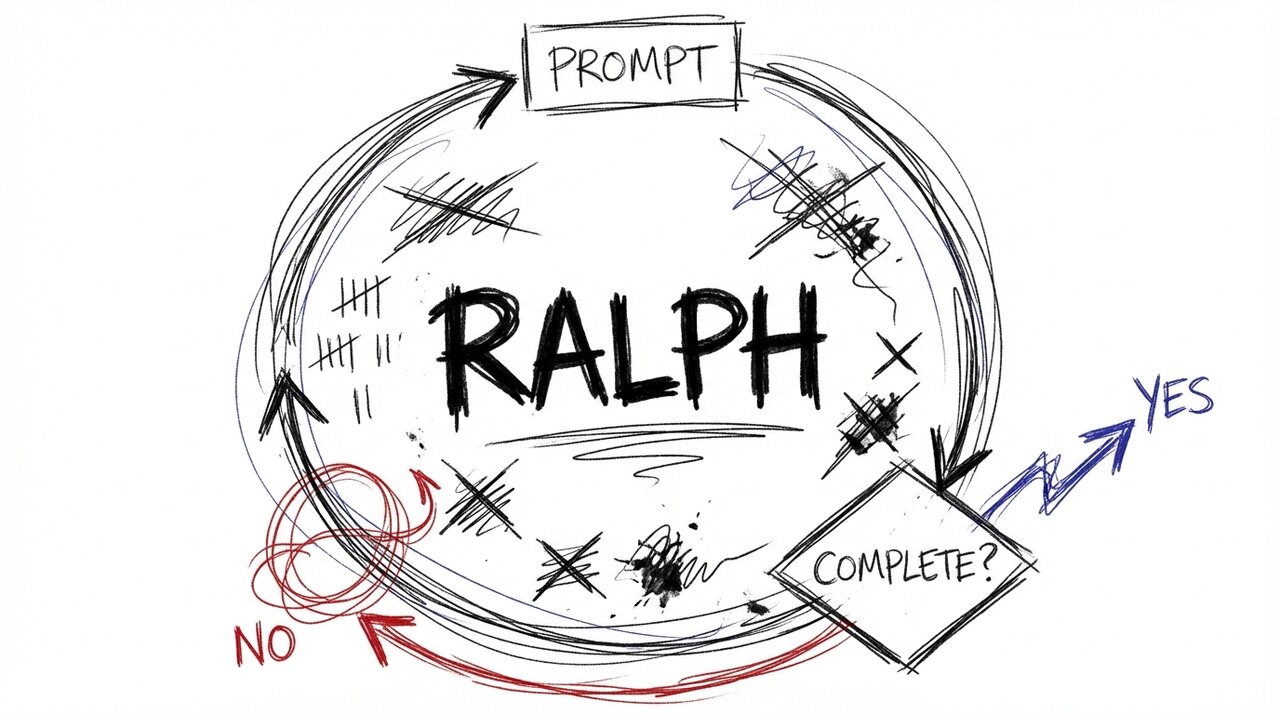

In mid-2025, Geoffrey Huntley turned that energy into a development methodology. Instead of asking an AI to nail a complex task in one shot, you put it in a loop and let it grind. Each iteration, it reads what it did before (via git history and text files), learns from its mistakes, and tries again.

The loop itself is predictable. The model is stochastic and flaky. The magic happens when you force the flaky thing through a predictable harness.

Why One-Shot Prompts Fail

For anything multi-step, giant system prompts fall apart:

- Context exhaustion. Large repos plus detailed specs blow past the context window. The model forgets earlier decisions.

- Premature completion. The model wants to finish the response, not finish the project.

- Half-finished features. Handlers created, routes not registered, tests not updated.

Anthropic’s research on long-running agents confirms this. Even frontier models drift and declare jobs done prematurely. Their solution: external state, clear task files, and structured progress logs.

Ralph is a hand-rolled version of that research.

The Loop

Ryan Carson’s implementation makes this concrete:

- Pipe a prompt into your AI agent

- Agent picks the next story from a PRD (product requirements document) file

- Agent implements it and runs tests

- Agent commits if passing and marks the story done

- Loop repeats until all stories pass

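```bash
#!/bin/bash
# ralph.sh: run the agent repeatedly until it emits the completion signal.

MAX_ITERATIONS=${1:-10}  # first argument, defaults to 10
SCRIPT_DIR="$(cd "$(dirname "${BASH_SOURCE[0]}")" && pwd)"  # directory holding this script

for i in $(seq 1 "$MAX_ITERATIONS"); do
  echo "Ralph Iteration $i of $MAX_ITERATIONS"

  # Pipe the prompt into Amp, stream its output to stderr, and capture it.
  # "|| true" ignores the agent's exit status (a safeguard under set -e).
  OUTPUT=$(cat "$SCRIPT_DIR/prompt.md" | amp --dangerously-allow-all 2>&1 | tee /dev/stderr) || true

  # Stop as soon as the agent reports that every story is done.
  if echo "$OUTPUT" | grep -q "<promise>COMPLETE</promise>"; then
    echo "Ralph completed all tasks!"
    exit 0
  fi
done

echo "Ralph reached max iterations."
exit 1
```

The key mechanism is the completion signal. You tell the model: “When all user stories are done and tests pass, output `<promise>COMPLETE</promise>`.” The loop checks for that string and exits when it appears.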

01 Agent Agnostic

The script above pipes the prompt into Amp, but the pattern works with Claude Code, Cursor, or any CLI that accepts piped prompts. The loop is the technique. The agent is interchangeable.
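If you want to swap agents without editing the script, one option is to turn the agent command into a variable. The following is a minimal sketch of the same loop as a Bash function. `ralph_loop` and the `RALPH_AGENT` environment variable are hypothetical names and are not part of Carson’s script. The default simply reuses the Amp invocation above. For other tools, check their own docs for how they accept a piped prompt.

```bash
#!/usr/bin/env bash
# Hypothetical variant of ralph.sh with a swappable agent command.
# RALPH_AGENT is an assumed variable; it defaults to the Amp call used above.
ralph_loop() {
  local prompt_file="$1" max="${2:-10}"
  local agent="${RALPH_AGENT:-amp --dangerously-allow-all}"
  local i output
  for ((i = 1; i <= max; i++)); do
    echo "Ralph Iteration $i of $max"
    # Run the agent through bash -c so RALPH_AGENT can be any command line;
    # the prompt file is fed to it on stdin, as in the original pipe.
    output=$(bash -c "$agent" < "$prompt_file" 2>&1 | tee /dev/stderr) || true
    # Same completion check as ralph.sh.
    if grep -q "<promise>COMPLETE</promise>" <<< "$output"; then
      echo "Ralph completed all tasks!"
      return 0
    fi
  done
  echo "Ralph reached max iterations."
  return 1
}
```

For a dry run you can point it at a stand-in command. For example, `RALPH_AGENT="echo working" ralph_loop prompt.md 3` returns status 1 after three iterations because the signal never appears.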

The PRD Structure

Write a PRD as JSON with discrete user stories. Each story has clear acceptance criteria. Ralph works through them one at a time.

```json
{
  "project": "TaskPriority",
  "userStories": [
    {
      "id": "US-001",
      "title": "Add priority field to database",
      "acceptanceCriteria": [
        "Add priority column: 'high' | 'medium' | 'low'",
        "Migration runs successfully",
        "Typecheck passes"
      ],
      "passes": false
    }
  ]
}
```

The `passes` field is key. Ralph marks it `true` when the story is done. The loop keeps running until all stories pass.

The constraint: each story must fit in one context window. Small, focused stories. One database column. One API endpoint. One UI component.
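The promise tag is only the model’s word that it’s done. If you’d rather not rely on that alone, the loop can also check the PRD itself. This is a minimal sketch that assumes the PRD is saved as prd.json and uses the `passes` key shown above. `all_stories_pass` is a hypothetical helper, and it uses a plain-text grep rather than a real JSON parser.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds (exit 0) only when no story in the PRD
# still has "passes": false. This is a text match, not a JSON parser, so a
# story title that literally contains that key/value pair would fool it.
all_stories_pass() {
  local prd="${1:-prd.json}"
  # A missing PRD counts as "not done" rather than silently succeeding.
  [[ -f "$prd" ]] || return 1
  # grep -q exits 0 when it finds an open story, so negate the result.
  ! grep -Eq '"passes"[[:space:]]*:[[:space:]]*false' "$prd"
}
```

Inside the loop, the exit test could then require both signals: `grep -q "<promise>COMPLETE</promise>" <<< "$OUTPUT" && all_stories_pass "$SCRIPT_DIR/prd.json"`. With that check in place, a premature completion claim just triggers another iteration.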

The Memory Layer

Each context window starts fresh. How does Ralph maintain continuity? Two files.

progress.txt is session memory. An append-only log where Ralph writes what it did, what failed, and what’s next. Each iteration reads this file before deciding what to work on.

AGENTS.md is permanent memory. Coding conventions, gotchas, and patterns that Ralph should always follow. Amp, like a growing number of other coding agents, reads this file automatically.

Over time, AGENTS.md becomes your team’s “AI coding manual.” The agent converges toward your style without fine-tuning.
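In Ralph, the agent writes progress.txt itself. If you also want the harness to leave a marker at each iteration boundary, for cases where the model forgets to write anything, an append-only helper is enough. This is a minimal sketch. `append_progress` is a hypothetical function, not part of Carson’s script, and the timestamp format is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Hypothetical harness-side logger. Appending with >> never rewrites
# earlier entries, so the file stays append-only.
append_progress() {
  local file="$1"; shift
  # One line per call: [UTC timestamp] message
  printf '[%s] %s\n' "$(date -u +%Y-%m-%dT%H:%M:%SZ)" "$*" >> "$file"
}
```

In the loop, a call such as `append_progress "$SCRIPT_DIR/progress.txt" "Iteration $i of $MAX_ITERATIONS started"` just before invoking the agent would do.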

My Experience

I’ve been using Ryan Carson’s implementation with Amp. The first time I ran it, I expected chaos. What I got was a diligent, slightly slow junior developer narrating their work in progress.txt.

Here’s what I realized: this is my manual workflow, automated. When I’m coding with Amp interactively, I do exactly what Ralph does. Prompt. Check results. Provide feedback. Repeat. Ralph removes me from the loop.

The quality of output depends almost entirely on the quality of input. A vague PRD produces vague code. Tight acceptance criteria produce focused implementations. The upfront exploration work matters way more than clever prompt tricks.

What surprised me most: Ralph writes tests I would have skipped at 11pm. It follows repo conventions once AGENTS.md is solid. It refactors its own code after seeing failures.

When Ralph Works

Ralph shines on mechanical, well-specified work: CRUD endpoints, pattern migrations, test coverage, UI wiring.

Ralph struggles with: fuzzy product definitions, core architectural decisions, security-sensitive changes.

A useful heuristic: if you wouldn’t hand this task to an unsupervised junior dev overnight, don’t hand it to Ralph overnight.

02 Hybrid Mode

Start with 3-4 iterations, read progress.txt, adjust the PRD or AGENTS.md, then resume. Use Ralph for scaffolding and do the tricky bits yourself.
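Between short runs, the checkpoint is mostly reading. The helper below prints how many stories are still open and the tail of progress.txt. It is a minimal sketch that assumes both files sit in one directory. `ralph_checkpoint` is a hypothetical name, and the story count is only a rough gauge because it is a plain-text match on `"passes": false`.

```bash
#!/usr/bin/env bash
# Hypothetical checkpoint helper for hybrid mode.
# Usage: ralph_checkpoint [dir] [lines]
ralph_checkpoint() {
  local dir="${1:-.}" lines="${2:-20}"
  local open
  # grep -o prints one line per match, so wc -l counts open stories
  # even when the whole PRD sits on a single line.
  open=$(grep -Eo '"passes"[[:space:]]*:[[:space:]]*false' "$dir/prd.json" | wc -l)
  # $((open)) strips any padding wc adds on some platforms.
  echo "Open stories: $((open))"
  echo "--- last $lines lines of progress.txt ---"
  tail -n "$lines" "$dir/progress.txt"
}
```

A short session might look like `./ralph.sh 3; ralph_checkpoint . 30`. Use `;` rather than `&&`, since ralph.sh exits with status 1 when it hits the iteration cap.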

Getting Started

- Pick one contained feature in a repo you know well

- Write a small prd.json with 3-5 user stories

- Create an AGENTS.md with your coding conventions

- Grab snarktank/ralph or write your own Bash loop

- Run it on a feature branch

- Review the diff, run tests, and merge (or nuke) as appropriate
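For the first steps, a tiny scaffold saves you from starting with a blank page. This is a minimal sketch and not part of snarktank/ralph. `ralph_scaffold` is a hypothetical function that creates prd.json, AGENTS.md, and progress.txt only if they don’t already exist. The placeholder contents are illustrative, so replace them with your real stories and conventions.

```bash
#!/usr/bin/env bash
# Hypothetical starter: creates the files Ralph relies on without
# overwriting anything that already exists.
ralph_scaffold() {
  local dir="${1:-.}"
  mkdir -p "$dir"

  # Placeholder PRD with one open story in the shape shown earlier.
  if [[ ! -e "$dir/prd.json" ]]; then
    cat > "$dir/prd.json" <<'EOF'
{
  "project": "MyFeature",
  "userStories": [
    {
      "id": "US-001",
      "title": "Replace with one small, focused story",
      "acceptanceCriteria": ["Typecheck passes"],
      "passes": false
    }
  ]
}
EOF
  fi

  # Empty conventions file for you to fill in.
  if [[ ! -e "$dir/AGENTS.md" ]]; then
    printf '# Coding conventions\n\n' > "$dir/AGENTS.md"
  fi

  # progress.txt starts empty; the agent appends to it each iteration.
  touch "$dir/progress.txt"
}
```

Then create the branch before the first run, for example `git checkout -b ralph/my-feature` (the branch name is hypothetical).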

The philosophy shift: iteration beats perfection. You’re not asking the model to be brilliant. You’re giving it a track to run on and letting it grind until it gets there.